General Purpose AI Agents - Building Reliable LLM Agents

We’re developing agentic systems that perform reliably on real-world tasks, such as creating presentations, deployable websites, and research reports, and not just on benchmarks. To achieve this, we’ve built a technical framework that treats the agent as a full-stack system spanning planning, retrieval, reasoning, tool execution, and verification, and evaluates it end to end under realistic conditions. By optimizing against outcome quality rather than proxy scores, we generate improvements that show up in production.

Our quality suites are derived from actual usage of our platform. Tasks are constructed from the kinds of requests customers make, such as producing a board-ready deck from a short brief and several PDFs, generating a responsive microsite from a design spec, or compiling a literature review with citations and a clean bibliography. Inputs are intentionally messy, specifications can be incomplete, and tools are exercised under realistic latencies and failure modes. We use rubric-driven evaluators specialized to the deliverable type so that the grader’s criteria reflect the structure, coverage, and correctness of the artifacts. These LLM-based judgments are combined with programmatic validators such as link checks, output length constraints, and ground-truth expectations where available. We calibrate the rubrics against periodic human ratings on stratified samples and update thresholds to prevent drift while keeping the loop fast enough for continuous iteration.
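As a rough illustration of how rubric judgments and programmatic validators might be combined, here is a minimal Python sketch. Everything in it is an assumption made for illustration: the `Artifact` type, the validator names, the weights, and the 0.7 threshold are hypothetical, and the rubric scores are passed in as plain numbers rather than produced by an actual LLM judge.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Artifact:
    """Hypothetical deliverable: its text plus the links it cites."""
    text: str
    links: List[str]


# A validator returns True when the artifact passes a hard, programmatic check.
Validator = Callable[[Artifact], bool]


def max_words(limit: int) -> Validator:
    """Build an output length check (word count at or below the limit)."""
    return lambda artifact: len(artifact.text.split()) <= limit


def links_well_formed(artifact: Artifact) -> bool:
    """Offline stand-in for a link check; a real one would fetch each URL."""
    return all(u.startswith(("http://", "https://")) for u in artifact.links)


def evaluate(
    artifact: Artifact,
    rubric_scores: Dict[str, float],   # per-criterion judge scores in [0, 1]
    weights: Dict[str, float],         # relative importance of each criterion
    validators: Dict[str, Validator],
    threshold: float = 0.7,            # assumed pass mark, tuned via calibration
) -> dict:
    """Weighted rubric score, gated by validators that must all pass."""
    failed = [name for name, check in validators.items() if not check(artifact)]
    total_weight = sum(weights.values())
    score = sum(rubric_scores[k] * w for k, w in weights.items()) / total_weight
    return {
        "score": score,
        "failed_checks": failed,
        "passed": not failed and score >= threshold,
    }
```

In this sketch the validators act as hard gates while the rubric contributes a graded score, so a well-written report with a malformed link still fails. Whether validators should gate or merely subtract from the score is a design choice the text above does not specify.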

We evaluate the system end to end. Agents must plan multi-step workflows, decompose and recombine subtasks, and coordinate subagents for synthesis, code generation, retrieval, and verification. Tools are treated as typed, stateful components with explicit contracts; we measure schema conformance, idempotency, retries and timeouts, and targeted recovery when operations fail. Because we measure model behavior and tool fidelity together, we can pinpoint whether bottlenecks arise from prompting, routing policies, or a specific adapter’s implementation.
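To make the idea of a tool with an explicit contract more concrete, the sketch below wraps a function with input schema checks, bounded retries, a time budget, and idempotency keys. It is a simplified illustration and not a description of the actual framework: the `Tool` class, its field names, and the defaults are hypothetical, and the time budget is checked only after the call returns, where a production system would need real cancellation.

```python
import time
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, Optional


class ToolError(Exception):
    """Raised on a contract violation or when all attempts are exhausted."""


@dataclass
class Tool:
    name: str
    required_fields: Dict[str, type]          # minimal input schema: field -> type
    fn: Callable[[Dict[str, Any]], Any]
    max_retries: int = 2                      # retries after the first attempt
    timeout_s: float = 5.0                    # per-attempt time budget
    _results: Dict[str, Any] = field(default_factory=dict)

    def validate(self, args: Dict[str, Any]) -> None:
        """Schema conformance: every required field present with the right type."""
        for key, typ in self.required_fields.items():
            if key not in args or not isinstance(args[key], typ):
                raise ToolError(f"{self.name}: field {key!r} must be {typ.__name__}")

    def call(self, args: Dict[str, Any], idempotency_key: str) -> Any:
        self.validate(args)
        # Idempotency: a repeated key returns the stored result without re-running.
        if idempotency_key in self._results:
            return self._results[idempotency_key]
        last_error: Optional[Exception] = None
        for _ in range(self.max_retries + 1):
            start = time.monotonic()
            try:
                result = self.fn(args)
            except Exception as exc:          # treat any failure as retryable here
                last_error = exc
                continue
            if time.monotonic() - start > self.timeout_s:
                # Post-hoc budget check; the slow result is discarded and retried.
                last_error = TimeoutError(f"{self.name} exceeded {self.timeout_s}s")
                continue
            self._results[idempotency_key] = result
            return result
        raise ToolError(f"{self.name}: all attempts failed") from last_error
```

Counting how often `validate` rejects model-produced arguments, how many attempts each call needs, and how often the stored result is reused gives per-tool numbers of the kind described above, separate from any measure of model quality.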

Inside the agent, we optimize where it matters most. Subtasks like planning, long-form synthesis, code generation, summarization, and factual verification are routed to different foundation models chosen along quality, latency, and cost frontiers. Context is managed with strict token budgeting, hierarchical summaries, and section-aware retrieval so that subagents exchange compact, typed state—plans, constraints, and evidence sets—rather than raw text dumps. Prompts are versioned per task family and use structured output constraints with lightweight scratchpads to preserve reasoning reliability without unnecessary verbosity.
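One simple way to picture routing along quality, latency, and cost frontiers is a policy that picks the cheapest model meeting a subtask's quality floor and latency ceiling. The Python sketch below assumes exactly that policy; the `ModelProfile` fields and the idea of per-subtask offline scores are illustrative assumptions, and real routing policies may weigh these factors differently.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class ModelProfile:
    """Hypothetical per-subtask measurements for one candidate model."""
    name: str
    quality: float         # offline eval score in [0, 1] for this subtask
    p95_latency_s: float   # observed 95th-percentile latency in seconds
    cost_per_call: float   # average cost of one call, in any fixed unit


def route(
    candidates: List[ModelProfile],
    min_quality: float,
    max_latency_s: float,
) -> Optional[ModelProfile]:
    """Return the cheapest candidate that meets both constraints, or None."""
    eligible = [
        m for m in candidates
        if m.quality >= min_quality and m.p95_latency_s <= max_latency_s
    ]
    if not eligible:
        return None  # the caller decides whether to relax constraints or fail
    # Cheapest first; on equal cost, prefer the higher-quality model.
    return min(eligible, key=lambda m: (m.cost_per_call, -m.quality))
```

Under this policy a summarization subtask with a modest quality floor would land on a small, cheap model, while factual verification with a stricter floor would be sent to a stronger one, with both decisions driven by the same measured profiles.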

We treat agent development like continuous integration. Every change to prompts, routing, tools, or policies runs through the quality suites with statistical guardrails to prevent regressions. We maintain cost–latency–quality frontiers for common workflows and tune policies to meet practical SLAs, such as bounding p95 latency while maintaining a minimum quality threshold and reasonable cost.
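A regression gate of this kind could look roughly like the Python sketch below. It assumes paired per-task quality scores for a baseline and a candidate, uses a bootstrap lower bound on the mean quality change as the statistical guardrail, and checks a nearest-rank p95 latency against a ceiling. The function names, the 0.02 tolerated drop, and the choice of bootstrap are assumptions for illustration; the text above does not say which statistical test is used.

```python
import random
from typing import List, Sequence


def p95(values: Sequence[float]) -> float:
    """Nearest-rank 95th percentile (integer arithmetic avoids float rounding)."""
    ordered = sorted(values)
    rank = (95 * len(ordered) + 99) // 100   # ceil(0.95 * n)
    return ordered[rank - 1]


def bootstrap_lower_bound(
    deltas: Sequence[float],
    n_resamples: int = 2000,
    alpha: float = 0.05,
    seed: int = 0,
) -> float:
    """One-sided lower confidence bound on the mean of paired differences."""
    rng = random.Random(seed)                # fixed seed keeps CI runs repeatable
    n = len(deltas)
    means = sorted(
        sum(rng.choices(deltas, k=n)) / n for _ in range(n_resamples)
    )
    return means[int(alpha * n_resamples)]


def gate(
    baseline_quality: List[float],
    candidate_quality: List[float],          # same tasks, same order as baseline
    candidate_latencies_s: List[float],
    max_p95_s: float,
    max_quality_drop: float = 0.02,          # assumed tolerance, not from the text
) -> bool:
    """Pass only if quality has not credibly regressed and p95 latency is in bounds."""
    deltas = [c - b for b, c in zip(baseline_quality, candidate_quality)]
    quality_ok = bootstrap_lower_bound(deltas) >= -max_quality_drop
    latency_ok = p95(candidate_latencies_s) <= max_p95_s
    return quality_ok and latency_ok
```

The sketch expects non-empty inputs. Pairing scores by task makes the comparison less sensitive to task difficulty than comparing two unpaired averages, which matters when suites mix easy and hard requests.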

The outcome of this approach is straightforward: higher completion rates on real tasks, fewer unnecessary retries thanks to targeted repair, predictable latency and cost through subtask routing and selective verification, and quality gains that generalize across workflows because they address systemic failure modes like state handoff and tool reliability. Overall, this gives us a robust process for improving agents over time and for leveraging the latest foundation models effectively.

Forecasting and Planning

Forecasting and Planning

Marketing and Sales AI

Marketing and Sales AI

Anomaly Detection

Anomaly Detection

Foundation Models

Foundation Models

Language AI

Language AI

Fraud and Security

Fraud and Security

Structured ML

Structured ML

Vision AI

Vision AI

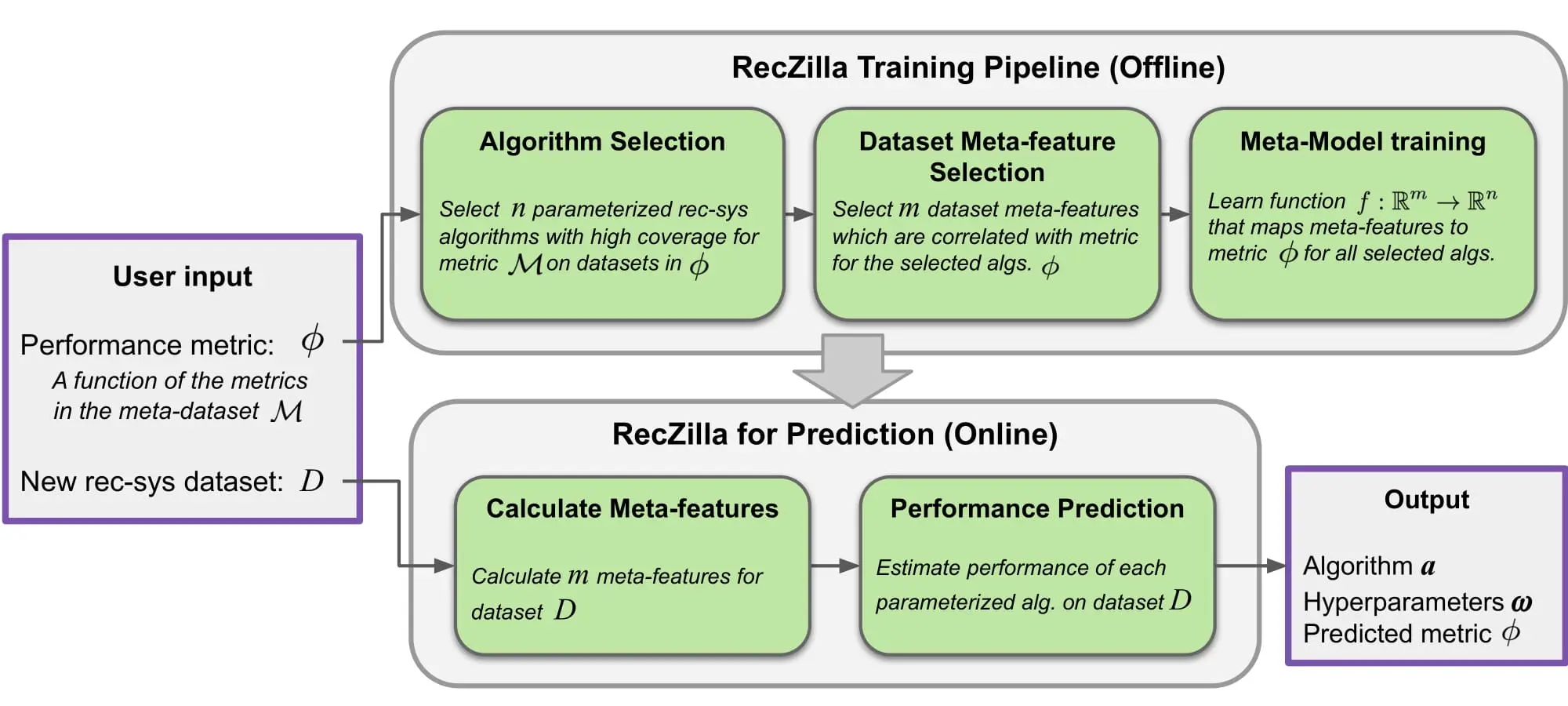

Personalization AI

Personalization AI